How I Prototyped a Whale Detection AI in One Afternoon

A tiny proof-of-concept built on synthetic whale songs — and why “good enough” prototypes matter.

A few weeks ago, I was reading about ship strikes on whales. The numbers are hard to ignore:

20,000+ whales killed by ships annually (International Whaling Commission)

One whale death every 26 minutes from vessel collisions

North Atlantic right whales down to just 340 individuals, with extinction possible within decades

80% of ship strikes could be prevented with better detection technology

Here's what really motivated me: we have the technology to solve this. What we need are better, more accessible whale detection systems deployed at scale. I wondered whether AI could help tackle a problem like this. Unsurprisingly, building a fully deployable system is a huge undertaking, but I wanted to tinker and learn, so I treated it as a weekend-style project to see what was possible in a few hours.

Sources: NOAA Marine Mammal Ship Strike Database, International Whaling Commission Ship Strike Report, North Atlantic Right Whale Consortium

Whale detection technology isn’t science fiction: several commercial and pilot systems already exist. Woods Hole Oceanographic Institution has developed thermal-imaging cameras with AI that can spot whales in real time from ships. The Whale Safe system combines acoustic buoys, visual sightings, and predictive models to send AIS alerts to vessels in high-risk areas. Companies like FarSounder are using forward-looking sonar to detect whales underwater before a collision occurs.

My EchoWhale experiment isn’t meant to compete with these; it’s a small, hands-on way to understand how such systems might work.

One Afternoon, Claude, and Me

I hadn’t touched neural networks in about a decade. My Python was patchy. I’d never processed audio before. So I opened my trusty notebook, sketched some ideas, fired up Claude, and started asking questions.

By the end of the afternoon, we had a small, working thing:

A basic neural network that could recognise synthetic whale calls.

An audio pipeline to turn sounds into spectrograms.

Some simple noise handling and visualisations.

It was all built on fake data we generated mathematically. In other words, the AI had never heard a real whale, only our computer-generated versions.

In controlled, lab-like conditions, it got everything right. In the real ocean, it probably wouldn’t work as is. But the point was that I could go from zero to something in a few hours and learn a lot along the way.

What I Learned

I also have to say, using Cursor made this whole thing so much easier. Setting up Python, installing libraries, and getting everything running used to feel like a mini-project in itself. With Cursor, it was smooth, and I could focus on actually building rather than wrestling with my environment.

I used Cursor to actually write and run the code, and Claude as my endlessly patient co-pilot for all the “wait, explain that again” moments. Keeping the chat separate from the editor meant I could tinker in peace without my code window turning into a wall of questions.

And let’s be honest: I spent a lot of time asking Claude to explain things to me… again and again. In the past, that’s the kind of thing I’d text my good friend Tom about (“Why won’t my code work?!”). Now, instead of pestering him, I could get the same patient explanations on demand and try things out right away.

Some of these libraries and tools felt daunting, but having that instant back-and-forth made me a lot more confident. By the end of the afternoon, I actually understood how the pieces fit together.

Building teaches faster than reading. I learned more about modern AI in one afternoon than I had in years of passively reading about it.

“Good enough” is good enough to start. Synthetic whale calls aren’t the real thing, but they were enough to explore the problem.

AI-assisted coding is like having a patient tutor. Claude handled the boilerplate and explained things as we went, leaving me to focus on the shape of the solution.

Tinkering lowers the barrier. You don’t need to be an expert to start. You just need curiosity and a free afternoon.

The next steps for something like this would be huge: gathering real hydrophone data, testing in noisy marine environments, partnering with researchers and shipping companies. That’s years of work and collaboration.

EchoWhale

Let's be realistic about what EchoWhale actually is:

Trained on synthetic data - we mathematically generated calls for blue, humpback, and fin whales and trained a classifier on those labels

Controlled test environment - perfect lab conditions, not chaotic ocean reality

Afternoon project scope - proof-of-concept, not production system

Academic exercise - demonstrates feasibility, needs real-world validation

Here’s the output:

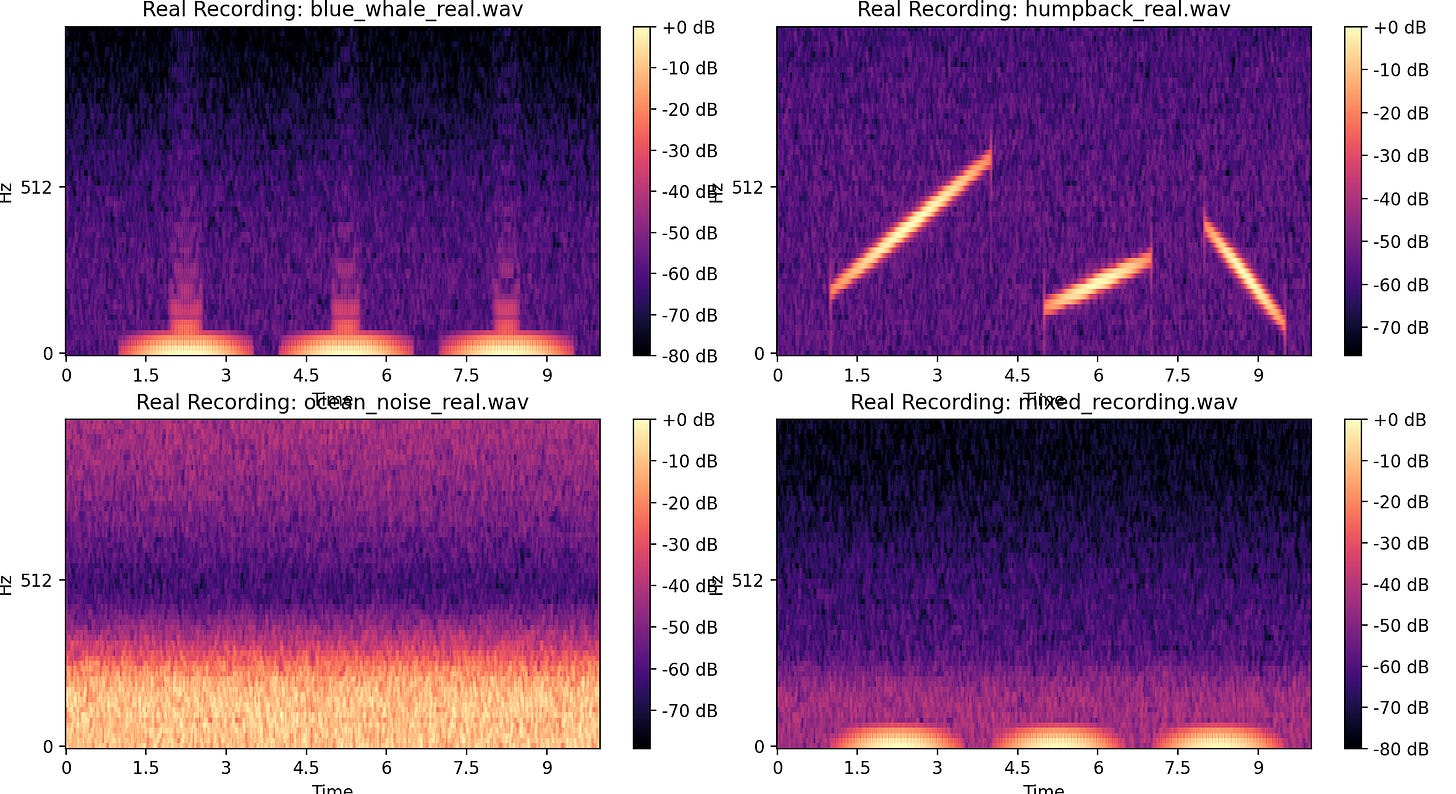

The image above is a snapshot of what the AI “sees” when it listens to our synthetic whale calls. Each panel is a spectrogram that shows how sound energy changes over time and across different frequencies.

Top left: Blue whale call – You can see the deep, rumbling pulses spaced a few seconds apart, bright at the very bottom of the frequency range.

Top right: Humpback whale call – These appear as sweeping diagonal lines, climbing in pitch over time; humpbacks are famous for these melodic, rising notes.

Bottom left: Ocean noise – A fuzzy, continuous band of energy, with no clear “call” patterns, representing background underwater sound.

Bottom right: Mixed recording – A combination of whale calls and background noise, showing how the patterns blend in more realistic, noisy conditions.

In all four panels, brighter colours mean louder sounds at those frequencies. The AI learns to distinguish the clear patterns of whale calls from the messy texture of ocean noise, which is a crucial step toward detecting whales in the wild.

Why Share This?

Because I think more of us should feel free to tinker, especially with conservation problems. The tech is here. The data exists. And the leap from “I can’t” to “I built a thing” might be smaller than you think.

If you’ve been sitting on an idea, waiting until you feel ready, maybe just start. Even if it’s messy and nowhere near perfect.

Technical stuff

Skip ahead if you’re not into code

Technical Stack & Tools

Development Environment:

Python 3.13 in a virtual environment

Cursor

Claude as AI coding assistant

Git version control, with the code hosted on GitHub

Core Libraries:

PyTorch - Neural network framework

librosa - Audio processing & mel-spectrogram generation

NumPy - Mathematical operations

Matplotlib - Data visualization

SciPy - Signal filtering & processing

AI Architecture

Neural Network Design:

Convolutional neural network (CNN) for spectrogram analysis

Conv2D layers with ReLU activation

MaxPooling for feature reduction

Dropout layers for regularization
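The design above can be sketched in PyTorch roughly as follows. This is a minimal illustration, not the actual EchoWhale model: the class name WhaleCallCNN, the channel counts, the dropout rate, the adaptive pooling layer, and the four output classes (assumed to mirror the four signal types listed under Data Generation Approach) are all assumptions. It expects single-channel spectrograms shaped (batch, 1, n_mels, time_frames).

```python
import torch
import torch.nn as nn


class WhaleCallCNN(nn.Module):
    """Hypothetical small CNN for (batch, 1, n_mels, time_frames) spectrograms."""

    def __init__(self, n_classes: int = 4, dropout: float = 0.3):
        super().__init__()
        self.features = nn.Sequential(
            # Block 1: learn local time-frequency patterns, then halve both axes
            nn.Conv2d(1, 16, kernel_size=3, padding=1),
            nn.ReLU(),
            nn.MaxPool2d(2),
            nn.Dropout(dropout),
            # Block 2: combine them into larger shapes such as sweeps and pulse trains
            nn.Conv2d(16, 32, kernel_size=3, padding=1),
            nn.ReLU(),
            nn.MaxPool2d(2),
            nn.Dropout(dropout),
            # Assumption: pool to a fixed 4x4 grid so clips of any length fit the head
            nn.AdaptiveMaxPool2d((4, 4)),
        )
        self.classifier = nn.Sequential(
            nn.Flatten(),
            nn.Linear(32 * 4 * 4, 64),
            nn.ReLU(),
            nn.Dropout(dropout),
            nn.Linear(64, n_classes),  # raw logits, one per class
        )

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        return self.classifier(self.features(x))
```

The model returns raw logits: during training they would typically feed nn.CrossEntropyLoss, and at inference torch.softmax(logits, dim=1) turns them into class probabilities.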

Data Generation Approach

Synthetic Whale Calls:

Blue whale: Low-frequency pulses (15-30 Hz) with harmonics

Humpback: Frequency sweeps (80-400 Hz) with modulation

Ocean noise: Filtered random signals mimicking real ocean acoustics

Mixed scenarios: Whale calls + background noise
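The four signal types above can be generated with NumPy and SciPy along these lines. This is a hedged sketch rather than the EchoWhale generator: the 2 kHz sample rate, 10-second clip length, pulse timing, modulation depth and rate, the 300 Hz low-pass cutoff used to shape the noise, and the signal-to-noise ratio in mix() are illustrative assumptions, and the function names are hypothetical.

```python
import numpy as np
from scipy.signal import butter, sosfilt

SR = 2000        # assumed sample rate in Hz; Nyquist (1000 Hz) covers both call types
DURATION = 10.0  # assumed clip length in seconds


def _time_axis():
    return np.arange(int(SR * DURATION)) / SR


def blue_whale_call(f0=20.0, pulse_len=1.5, period=4.0):
    """Low-frequency pulses: a fundamental in the 15-30 Hz band plus two harmonics."""
    t = _time_axis()
    tone = sum(np.sin(2 * np.pi * k * f0 * t) / k for k in (1, 2, 3))
    phase = t % period
    # Sine-squared envelope so each pulse fades in and out smoothly
    env = np.where(phase < pulse_len, np.sin(np.pi * phase / pulse_len) ** 2, 0.0)
    return env * tone


def humpback_call(f_lo=80.0, f_hi=400.0, note_len=2.0, mod_rate=5.0, mod_depth=10.0):
    """Repeated upward sweeps from f_lo to f_hi with sinusoidal frequency modulation."""
    t = _time_axis()
    frac = (t % note_len) / note_len  # goes 0 -> 1 within each note
    inst_freq = f_lo + (f_hi - f_lo) * frac + mod_depth * np.sin(2 * np.pi * mod_rate * t)
    phase = 2 * np.pi * np.cumsum(inst_freq) / SR  # integrate frequency to get phase
    return np.sin(np.pi * frac) ** 2 * np.sin(phase)


def ocean_noise(rng, cutoff_hz=300.0):
    """White noise low-pass filtered so most energy sits at low frequencies."""
    sos = butter(4, cutoff_hz, btype="low", fs=SR, output="sos")
    return sosfilt(sos, rng.standard_normal(int(SR * DURATION)))


def mix(call, noise, snr_db=5.0):
    """Scale the noise so the call sits snr_db above it, then add the two."""
    p_call = np.mean(call ** 2)
    p_noise = np.mean(noise ** 2)
    scale = np.sqrt(p_call / (p_noise * 10 ** (snr_db / 10)))
    return call + scale * noise
```

For example, mix(humpback_call(), ocean_noise(np.random.default_rng(0)), snr_db=0.0) produces a "mixed scenario" clip in which the call and the noise carry equal power.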

Audio Processing Pipeline:

Convert audio to mel-spectrograms

Limit the frequency range to 0-1000 Hz, where the synthetic calls sit, and normalize the values

Feature extraction using librosa

Real-time visualization of AI decision-making

Development Method: Real-time collaboration between human and AI coding assistance, with iterative building and testing throughout the afternoon.

What's Missing for Production:

Real ocean recording validation (we used mathematical whale call simulations)

Robustness testing in actual marine noise environments

Performance optimization for real-time processing requirements

Integration with maritime navigation systems

Regulatory compliance and safety certifications

Get the complete EchoWhale code on GitHub and start building your own conservation technology.

Ready to build your conservation AI project? The ocean needs more builders like you. Start this weekend.