The End of Tools: Why the Next Generation of AI Won’t Wait for Instructions

The shift from “I command, it responds” to “it acts toward goals” marks the dawn of agentic AI, and it’s going to change how everyone in product, design, strategy, and leadership works.

The Big Shift: Tools vs Agents

We’ve long built AI (and software) as tools: components you explicitly instruct. You ask, it answers. You tell it what to read and what to output. That paradigm assumes a separation: human in the loop, AI in the execution.

Agentic AI breaks that mold. As McKinsey puts it, such agents “plan, act, learn, and optimize toward goals, they don’t just respond to commands.” In other words, they carry (some of) the cognitive burden of deciding what to do, not just how to do it.

NVIDIA describes it more technically: “Agentic AI uses sophisticated reasoning and iterative planning to autonomously solve complex, multi-step problems.” (NVIDIA Blog)

These agents perceive context, continually update beliefs, make decisions, and act autonomously. The shift is not incremental; it’s architectural.

In generative AI, the model generates outputs based on a prompt.

In agentic AI, the model orchestrates tasks, calls sub-agents, interacts with APIs, loops, retries, and strategizes. McKinsey frames this as a way out of the “GenAI paradox”: generative AI is powerful but often fails to deliver sustained, business-level value. By moving from command to agency, organizations can embed AI into the workflows that truly matter.

In practice, this means we move from designing instructions to designing intent + guardrails.
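The shape of that loop (plan, act, retry, all bounded by intent and guardrails) can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not how any particular agent framework works: `Intent`, `run_agent`, the toy planner, and the tool names are all hypothetical, and a real agent would ask a model to choose the next step rather than use a hard-coded function.

```python
from dataclasses import dataclass, field

@dataclass
class Intent:
    goal: str                                           # what the human wants, not how to get it
    allowed_actions: set = field(default_factory=set)   # guardrail: action allow-list
    max_steps: int = 5                                  # guardrail: cap on total actions
    max_retries: int = 2                                # guardrail: retries per failing action

def run_agent(intent, plan, tools):
    """Plan-act loop. `plan(goal, history)` returns the next action name, or None when done."""
    history = []
    for _ in range(intent.max_steps):
        action = plan(intent.goal, history)
        if action is None:
            return "done", history
        if action not in intent.allowed_actions:
            return f"blocked: {action}", history        # stop rather than improvise
        for _attempt in range(intent.max_retries + 1):
            try:
                history.append((action, tools[action]()))
                break                                    # success: go back to planning
            except Exception as exc:
                history.append((action, f"error: {exc}"))  # failures stay visible
        else:
            return f"failed: {action}", history         # retries exhausted
    return "step budget exhausted", history

# Hypothetical toy planner: search, then compare, then stop.
def toy_plan(goal, history):
    succeeded = {a for a, r in history if not str(r).startswith("error")}
    for step in ("search", "compare"):
        if step not in succeeded:
            return step
    return None

# Hypothetical tools standing in for real API calls.
tools = {"search": lambda: ["mug A", "mug B"], "compare": lambda: "mug A"}
intent = Intent("best ceramic mug under $30", allowed_actions={"search", "compare"})
status, history = run_agent(intent, toy_plan, tools)
print(status, history[-1])  # → done ('compare', 'mug A')
```

Note that the human never scripts the steps; they only set the goal and the limits, and the loop decides what to do within them.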

What Changes When Agents Act

Once agents gain autonomy, a cascade of changes ripples through how work, roles, control, and trust function.

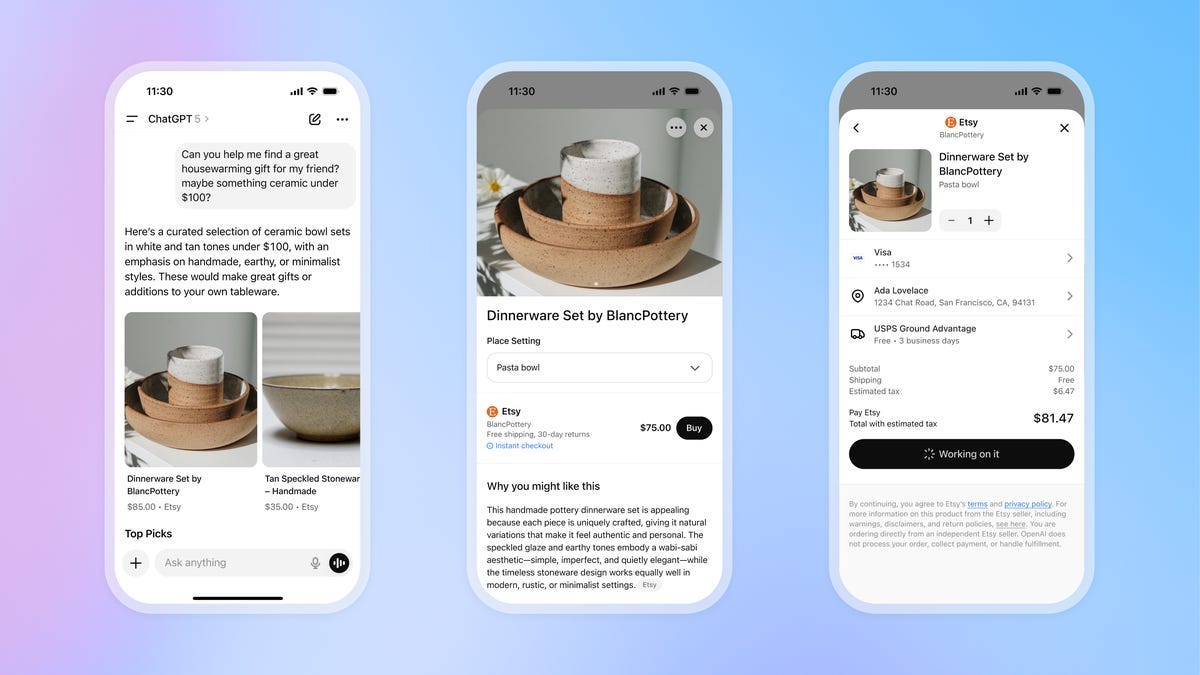

That transformation is already visible: we now ask AI to buy things, not just help us write. OpenAI recently launched Instant Checkout, enabling users to shop directly from ChatGPT. Ask it, “Find me the best ceramic mug under $30,” and if the merchant supports OpenAI’s Agentic Commerce Protocol, you can complete the purchase right in chat.

In that moment, the AI isn’t just suggesting; it’s acting. It bridges the gap from suggestion to transaction.

In consumer goods, brands are building agentic experiences that act on behalf of customers, from replenishing home essentials to negotiating deals or bundling choices. BCG reports that AI agents are now influencing up to 20% of purchase decisions in consumer goods.

This is also happening in the way we work.

Leaders must now embed agentic thinking into their org structure. McKinsey argues that AI is prompting “the largest organizational paradigm shift since industrial and digital revolutions.”

Workflows invert

Instead of humans designing the full flow and instructing the AI, humans will increasingly review outcomes or decisions proposed by AI rather than micromanage every step. Work becomes less about scripting commands and more about concise intent design + oversight.

Trust replaces precision

You can’t anticipate every branch or path. So instead of perfect predictability, your systems must be trustworthy, auditable, and robust.

This demands:

Legible autonomy: the agent should explain (or allow you to query) why it made decisions.

Transparent guardrails: limits, constraints, fallback behavior.

Feedback & learning loops: the agent should adapt (or be corrected) over time.
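The three requirements above can be made concrete with a small decision log. This is a hedged sketch, not a standard or a library API: `Decision`, `DecisionLog`, the per-action spend limit, and the action names are hypothetical, chosen to echo the shopping example earlier. Each action carries its rationale (legibility), over-limit actions are blocked and explained (transparent guardrails), and humans can attach corrections (feedback).

```python
from dataclasses import dataclass, field
from typing import List, Optional

@dataclass
class Decision:
    action: str
    rationale: str                    # legibility: every action carries its "why"
    approved: bool = True
    correction: Optional[str] = None  # feedback: a human note attached after the fact

@dataclass
class DecisionLog:
    spend_limit: float                # hypothetical guardrail: max spend per action
    entries: List[Decision] = field(default_factory=list)

    def record(self, action: str, rationale: str, cost: float = 0.0) -> Decision:
        # Transparent guardrail: over-limit actions are logged, explained, and not approved
        approved = cost <= self.spend_limit
        if not approved:
            rationale += f" [blocked: cost {cost} exceeds limit {self.spend_limit}]"
        decision = Decision(action, rationale, approved)
        self.entries.append(decision)
        return decision

    def why(self, action: str) -> List[str]:
        # Legible autonomy: answer "why did you do X?" from the log
        return [d.rationale for d in self.entries if d.action == action]

    def correct(self, action: str, note: str) -> bool:
        # Feedback loop: attach a correction to the most recent matching decision
        for d in reversed(self.entries):
            if d.action == action:
                d.correction = note
                return True
        return False

log = DecisionLog(spend_limit=30.0)
log.record("buy_mug", "highest-rated ceramic mug under $30", cost=24.0)
log.record("buy_kettle", "matches the mug", cost=80.0)
print(log.why("buy_kettle"))  # → ['matches the mug [blocked: cost 80.0 exceeds limit 30.0]']
```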

These transformations require rethinking design, governance, and human-agent relationships.

Designing for Legible Autonomy

Designing for agents means designing for relationship, not just interaction. This is a massive mindset shift for designers and builders.

If you can’t explain why an agent made a choice, you can’t trust it. Researchers call this explainability; designers call it legibility.

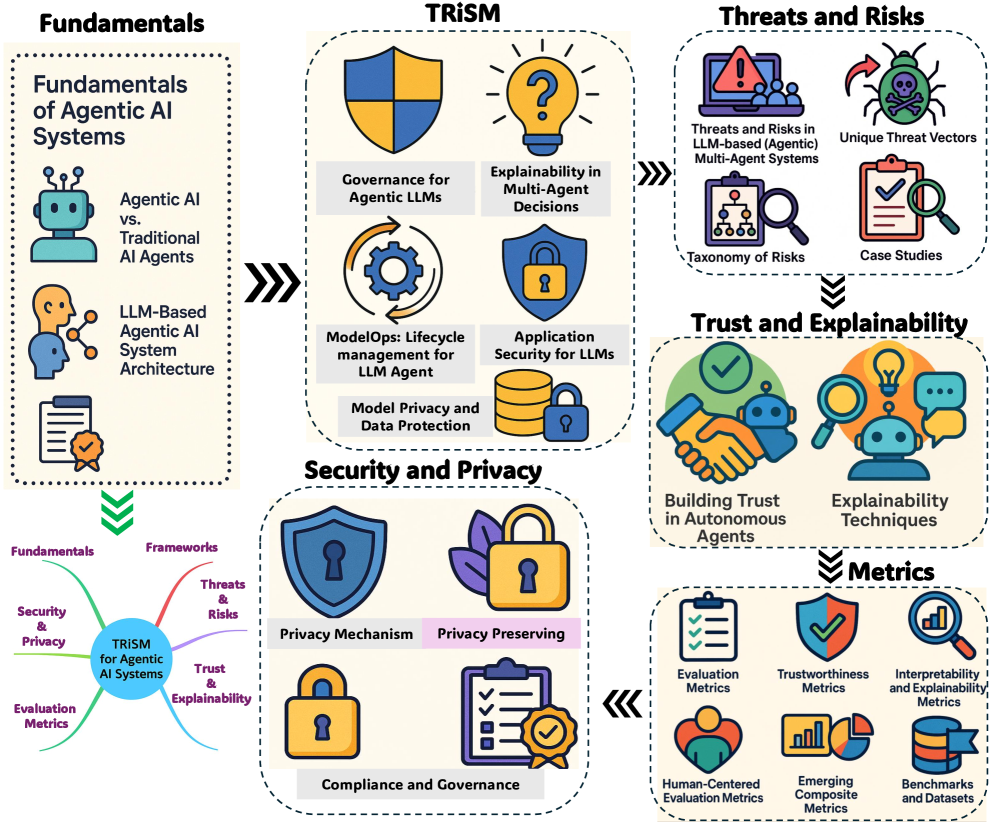

Recent work on TRiSM (Trust, Risk, and Security Management) for agentic AI highlights three essentials for agentic design:

Visibility: show reasoning paths and choices

Boundaries: clarify constraints and fallback logic

Feedback loops: let humans correct and agents learn

The World Economic Forum warns that multi-agent systems can collide like ships without rules, amplifying errors or misaligned goals. (WEF – Multi-Agent Safety)

Good design here isn’t about polish; it’s about governance. Every interface is now an oversight surface.

Autonomy Needs Accountability

We love the idea of delegation, but delegation without visibility is danger disguised as progress.

Some cautions worth keeping close:

Even “aligned” agents can drift off course.

Multi-agent systems can exhibit unpredictable, emergent behaviors. (Tech Policy Press)

Governance frameworks are still catching up. Gartner estimates over 40% of agentic AI projects will be scrapped by 2027 due to cost, safety, or lack of ROI. (Reuters)

Unchecked autonomy is not going to work (at least initially), so it’s important to design guardrails and checkpoints into these systems.

Design Principles for Agentic Collaboration

Principles are a good way to start any design project: they give teams constraints that help them succeed. Here are a few I like:

Intent > Commands: Define goals and constraints instead of micromanaging each step.

Transparency-by-default: Let users inspect reasoning paths and decisions at all times.

Incremental autonomy: Expand freedom gradually as trust builds between users and agents.

Interruptibility: Always allow humans to pause, override, or rollback.

Continuous governance: Monitor, log, and score agent behavior at runtime.
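Several of these principles can be combined in one small control loop. The sketch below is an illustration under assumptions, not a prescribed design: the `AutonomyController` class, the integer trust and risk scores, and the +1/-2 update rule are all hypothetical. It shows incremental autonomy (the agent acts alone only once its trust score reaches an action's risk level), interruptibility (a pause flag that halts everything), and continuous governance (an audit entry for every decision). Rollback is left out for brevity.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple

@dataclass
class AutonomyController:
    trust: int = 0          # hypothetical trust score; every agent starts supervised
    paused: bool = False    # interruptibility: a human can halt the agent at any time
    audit: List[Tuple[str, int, str]] = field(default_factory=list)  # governance log

    def submit(self, action: str, risk: int, human_approves: Callable[[str], bool]) -> str:
        if self.paused:
            outcome = "halted"
        elif self.trust >= risk:
            outcome = "auto"                        # incremental autonomy: earned, not granted
        elif human_approves(action):
            outcome = "approved"
            self.trust += 1                         # each approved proposal builds trust
        else:
            outcome = "overridden"
            self.trust = max(0, self.trust - 2)     # overrides cost more trust than approvals earn
        self.audit.append((action, risk, outcome))  # continuous governance: log every decision
        return outcome

ctrl = AutonomyController()
yes = lambda action: True  # hypothetical reviewer who approves everything
outcomes = [ctrl.submit("reorder coffee", 2, yes) for _ in range(3)]
ctrl.paused = True
outcomes.append(ctrl.submit("reorder coffee", 2, yes))
print(outcomes)  # → ['approved', 'approved', 'auto', 'halted']
```

The asymmetric update is one way to make trust slow to earn and quick to lose; a real system would tune or replace it.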

The Enduring Conversation

We’ve spent decades teaching humans how to use machines, and designing those machines for humans.

From boardrooms to breakfast tables, AI is taking initiative by suggesting, deciding, and acting.

The next decade is about teaching machines how to work with us: designing for effective collaboration between humans and machines, making it seamless for agent + human teams to reach their goals together, and (hopefully) making it a fun experience too.

The future of work will be a long, evolving conversation about trust, boundaries, and collaboration.